Continuing backward in time (from the Newton, General Magic, and PenPoint in the 1990s) to the late 1970s, my nostalgic trip through technology last week dropped me on the Apple ][, and on the big question of why the computer industry repeatedly ran out of memory addresses.

My first computer was an Apple ][+ around 1983, nearly 40 years ago. After a week diving into how it worked, and thanks to the internet quenching that thirst for knowledge, I now know more about this computer than I did back then. I even found the issue of Byte magazine from 1977 where Woz himself explained what the computer could do.

In hindsight, what surprised me the most is that before I even owned my Apple ][, 64K of memory was already commonplace and by 1985 I had a 512K Macintosh, so it should have been glaringly obvious to the adults at that time that memory in computers was not the constraint it had been in the 1960s and 1970s.

In hindsight, what I can’t figure out is why neither Intel, Motorola, MOS, nor Zilog grew the address space on their chips before the more complex task of moving from 8 bit to 16 bit to 32 bit data sizes.

In this last week I dove as far down as the Visual 6502 project, which has deconstructed every gate on the MOS 6502 (the microprocessor in the Apple ][, ][+, and //e, as well as the Atari 2600, Commodore PET, and eventually the Nintendo NES). Making any changes to this chip, or to the 8086, 6800, or Z80, was incredibly challenging, as all were designed by hand, physically drawn layer by layer.

But growing the address bus from 16 to 24 bits would only have required adding a handful of new instructions that carried one more byte of address, and growing the instruction pointer by 8 bits. No changes would have been required on the other registers if the goal was simply to address 16MB instead of 64K.
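To make the "one more byte of address" idea concrete, here is a minimal sketch in Python. The first encoding is the real 6502 absolute-addressing form of LDA (opcode `AD`, then the address low byte first). The second is purely hypothetical: the opcode value and the `decode_address` helper are my own placeholders for illustration, not anything MOS ever defined.

```python
def decode_address(operand: bytes) -> int:
    """Combine little-endian operand bytes into a single address."""
    return int.from_bytes(operand, "little")

# Real 6502: LDA $1234 (absolute addressing) is encoded as AD 34 12.
lda_absolute = bytes([0xAD, 0x34, 0x12])
addr16 = decode_address(lda_absolute[1:])

# Hypothetical 24-bit variant: same layout, one extra address byte.
# 0xFF is only a placeholder opcode for this sketch, not a real assignment.
HYPOTHETICAL_LDA_LONG = 0xFF
lda_long = bytes([HYPOTHETICAL_LDA_LONG, 0x56, 0x34, 0x12])
addr24 = decode_address(lda_long[1:])

print(hex(addr16))  # 0x1234
print(hex(addr24))  # 0x123456
```

The decoding logic is identical in both cases. The only differences are the instruction length and the width of the register that holds the resulting address.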

16MB was the typical RAM size in the ads in the February 1991 issue of BYTE, and thus such a design change would have made programming in the 1980s far easier.

Pondering this alternative history, if the people at MOS could have seen the future, they could have had a 652402 ready by 1980, and what came after the Apple //e could have been a far bigger upgrade: the mythical Apple //4 in my title, with a wider bus supporting up to 16MB of memory in one big, flat, simple memory space.

I suspect that would have made the Apple // a lot more competitive with the IBM/DOS computers, and that it would have avoided the mess of the 80286 and its kludge of segmented memory.

All in all, I’m at a loss to understand why the microprocessor industry didn’t see this problem as important enough to solve, with a progression from 16-bit addresses to 24-bit, then 32-bit, then 48-bit.
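For a sense of scale, this small Python sketch works out how much memory each step in that progression could address. The function names are my own, and it assumes one byte per address, which holds for the chips discussed here.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 2 ** address_bits

def human(n: int) -> str:
    """Format a power-of-two byte count using binary units (1 KB = 1024 bytes)."""
    units = ["bytes", "KB", "MB", "GB", "TB"]
    i = 0
    while n >= 1024 and i < len(units) - 1:
        n //= 1024
        i += 1
    return f"{n} {units[i]}"

for bits in (16, 24, 32, 48):
    print(f"{bits}-bit addresses: {human(addressable_bytes(bits))}")
# 16-bit addresses: 64 KB
# 24-bit addresses: 16 MB
# 32-bit addresses: 4 GB
# 48-bit addresses: 256 TB
```

Each extra byte of address multiplies the reachable memory by 256, which is why a single added byte would have carried the 6502 from 64K to 16MB.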

In my alternative timeline the 80208 would have 24 bit addresses (copying the 652402), the 80386 would have 32 bit addresses, and by the Pentium 48 bit would be the norm. Motorola came closest to this vision, with the 68000 having 24 bit addresses, but that was a chip with 32-bit registers (reading memory over a 16-bit data bus), and thus again was under-designed in terms of memory access, with just 24 bit addresses.

If you worked in the microprocessor industry back then, please tweet me and explain why history didn’t follow this path.

UPDATE: 3 days after posting, Bill Mensch of The Western Design Center answered the questions in this post. Bill is not only one of the designers of the 6502, but he was one of the “defectors” from Motorola who worked on the 6800 too. That, and Bill’s company has sold billions of 6502s since the 1970s, including the W65C02 in the Apple IIc and W65C816S in the Apple IIgs.

Long story short, the computer industry looked to 32-bit processors as the next logical step while the chip producers looked at the 6502, 6800, Z80, etc. as microcontrollers, serving a market where code was measured in bytes and RAM in K. Or in other words, the computer industry saw 32-bit microprocessors coming in the early 1980s. With those chips came 24-bit (to start with) or 32-bit (eventually) address buses.

Over the last 3 days I also came across apple2history.org, which explained that Apple expected the Apple II to be long gone by 1980. They nearly bet the company on the Apple ///, and didn’t bother updating the ][+ to the IIe until the /// was an obvious failure. Then they again nearly bet the company on the Lisa and the Macintosh, again mostly ignoring the II series except for the shrink in size and cost of the IIc.

Thus the best answer to my question is that the foresight of the time said jump to 32 bits, while my hindsight would have pushed toward a more incremental set of improvements.

And that is perhaps a lesson for entrepreneurs to learn here. Move fast and break things is a commonplace mantra in software startups of the 21st Century. Breaking things is not something hardware companies can afford to do. Moving fast is hard for them as well. But the 6502 team themselves designed a chip in a year. Steve Wozniak similarly designed the Apple I in a year, and shipped the Apple II a year later. In hindsight, what I wonder is why Apple didn’t keep that pace. Why not incremental Apple ///, /V, V, V/, V//, etc. each year, pushing MOS and WDC for 2MHz, 3MHz, 4MHz, incremental speed-ups, plus an op-code here or there for small improvements? That alternative history path might then have had someone propose the 65(24)02 idea of more memory without segmentation.

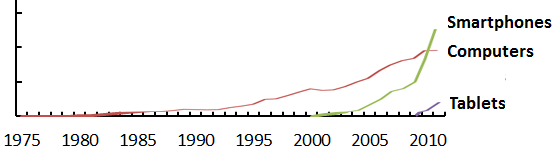

But no, waterfall design was the norm for both hardware and software in the 20th Century. It took the internet and software as a service to break that model for software, and a few years beyond that to break that habit for smartphones and tablets, the ubiquitous computers of today.

UPDATE: One month later, I thought I was finished with this story, but then stumbled on the Synertek page on Wikipedia. Turns out Apple didn’t buy 6502s from MOS, they bought them from Synertek, a second source provider. Why Synertek? Probably because they were local to Apple, but even more likely because Synertek gave Apple 30 days credit on purchases, back in 1976 when the only Apple product was the Apple kit computer (a.k.a. the Apple I).

More relevant to my original question about why Apple never asked for a 6502 upgrade, a 2013 “Oral History of Robert ‘Bob’ Schreiner,” founder of Synertek, provided that answer:

I got a call from Steve Jobs. And Steve called up and said, “Well, we like your product, we bought a lot of parts, but the time has come to go to a 16-bit machine. Are you going to design a 16-bit version of the 6500?” And I said, “No.” I said, “Would you be willing to fund it?” And he said, “No.” I said, “Well, let me tell you, to take on a project like that is absolutely betting the farm, and I can’t afford to do it, and I don’t think my board would let me do it. So the answer is no.” And he said, “Well, you have to understand that that means you’re forcing us to switch to the 68000.” I said, “Yeah, I knew that was going to happen someday, Steve, but there’s nothing I can do to prevent it. I don’t have the resources to build—design, build, and support a 16-bit machine. Even though you’re a great customer, give us lots of orders, I just can’t afford to take that gamble.”

And there is my answer. Jobs did ask. But Synertek hadn’t designed the 6502, didn’t have CPU designers on staff, and Bob apparently either didn’t point Steve to MOS or didn’t remember doing that. MOS by then was owned by Commodore, Apple’s direct competitor, and Jobs never did anything to help a competitor.

UPDATE: Two years later, after signed copies of the book documenting this rabbit hole, and after thinking there wasn’t any history left to uncover, there was indeed a very interesting bit of hidden history… the Synertek SY6516.

Was this Bob Schreiner’s response to that visit by Steve Jobs? Was this instead a response to Atari asking for a better chip for their PC? When exactly this design was floated is unknown. All that is known is that this SY6516 is not a chip that ever went into production. That overview was marketing to see if there was market demand. The Motorola 68000 won the whole industry’s attention, except of course from IBM.